Introduction

Image-guided radiotherapy aims to increase the accuracy of radiation therapy so that the optimal dose is delivered to the patient. MRIdian (ViewRay Inc., Oakwood, OH, USA), a magnetic resonance (MR) image-guided radiation therapy system, not only avoids radiation exposure beyond the dose delivered to the patient, but also enables real-time adaptive treatment that takes into account changes in both the tumor and the surrounding organs at risk [1, 2]. However, MR imaging is fundamentally different from computed tomography (CT) imaging. Radiation dose cannot be calculated directly from an MR image because it lacks the electron density information required for dose calculation [3]. To solve this problem, image matching algorithms using deformable registration between MR and CT were developed. However, volumetric changes during image registration limit the calculation of the radiation dose actually delivered [4, 5]. In this respect, a synthetic CT generated from the MR image would provide the information required for real-time RT planning with actual dose calculation. We therefore aimed to develop a synthetic CT generation algorithm using deep learning. MR images obtained from the MR-guided radiotherapy system were paired with the deformed planning CT to build a model for synthetic CT image generation [6–8]. The generated synthetic CT images were then compared with the deformed CT.

Materials and Methods

We analyzed 1,209 MR images from 16 patients who underwent MR image guided radiotherapy from December 2015 to August 2017. This study was approved by the Institutional Review Board prior to initiation (approval No. 1708-051-876).

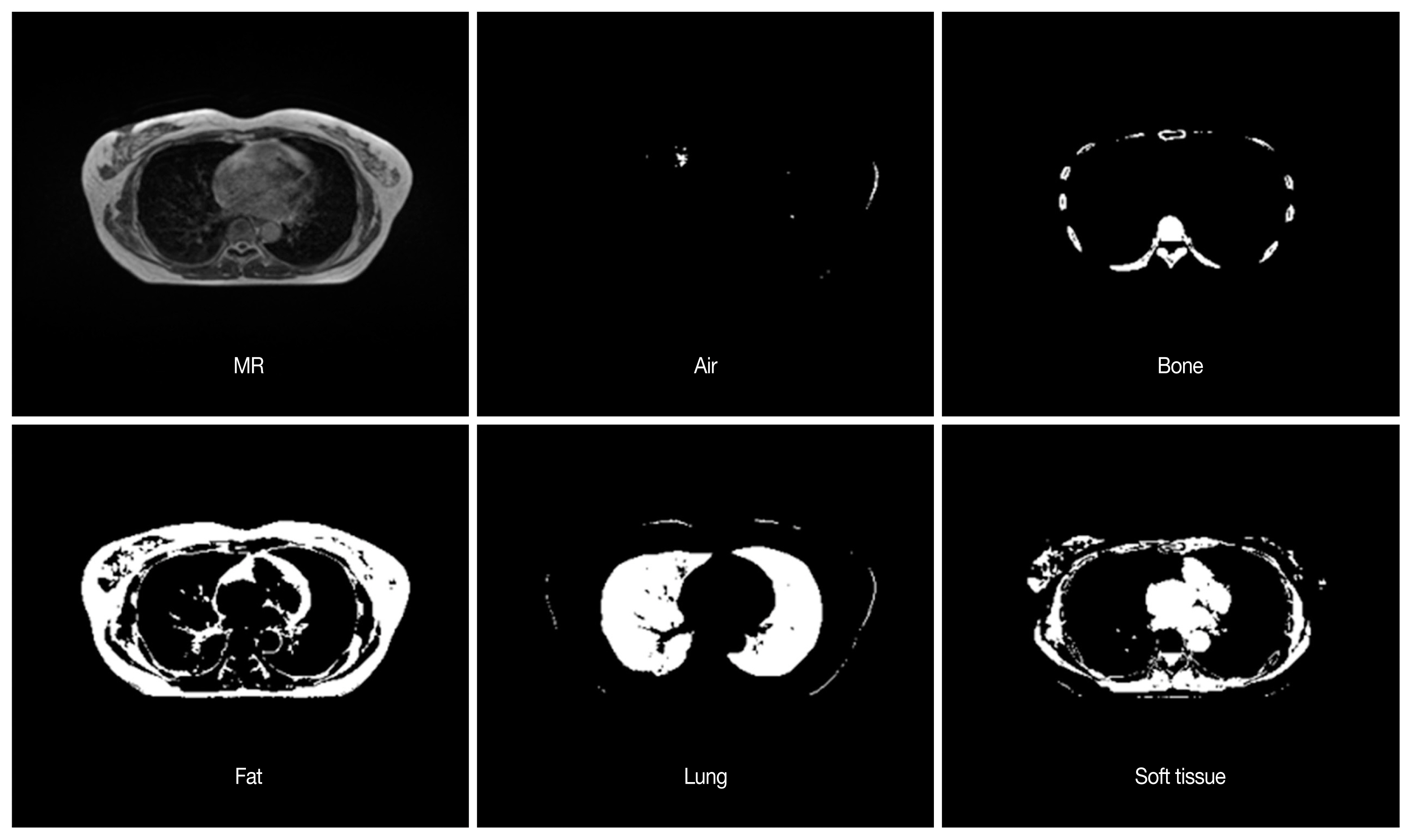

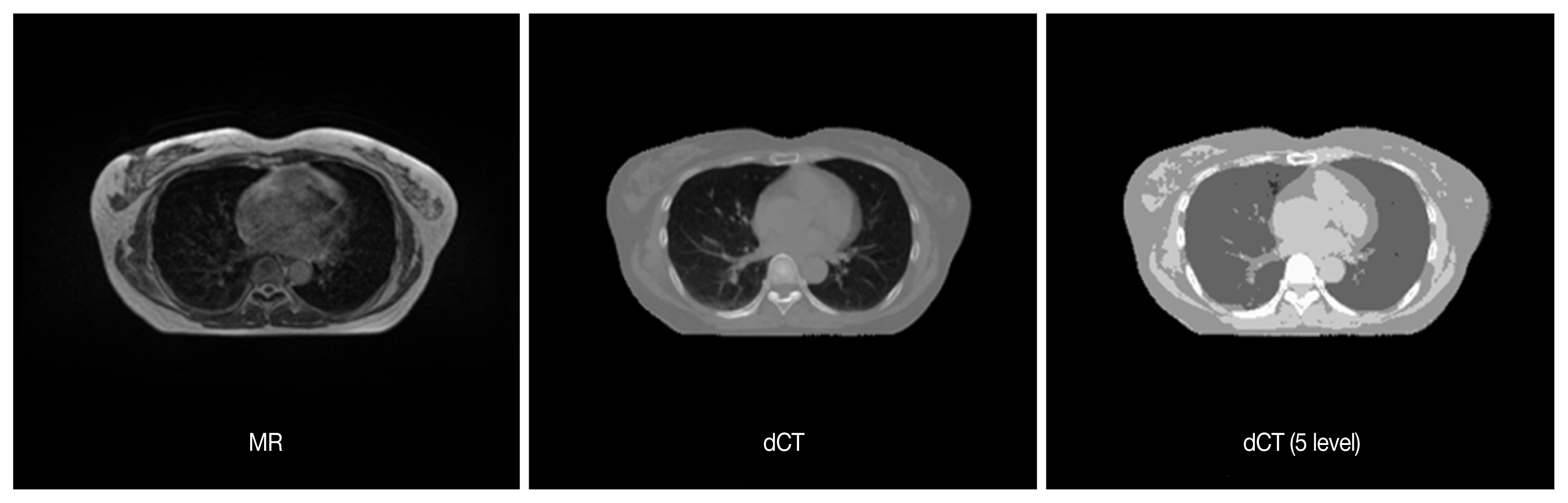

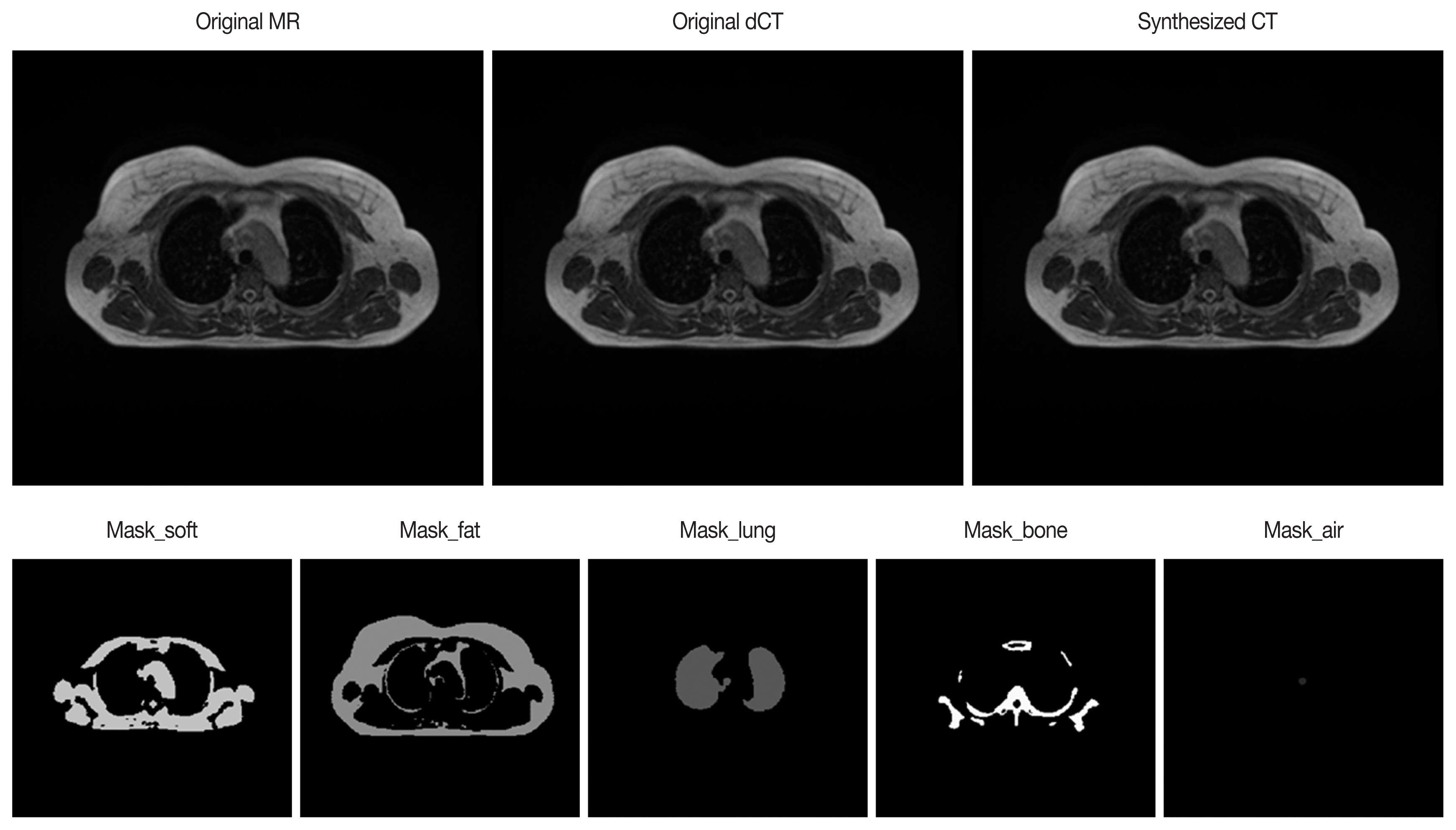

Each MR image was learned using the U-net model (Convolutional Networks for Biomedical Image Segmentation) [9]. Blurred slices at the edges of the axial dimension were excluded from each patient's MR dataset. CT images were aligned to the corresponding MR images by the deformable registration provided by the ViewRay treatment planning system. No manual correction was performed. After registration, these deformed CT (dCT) images were resampled to the same size as the MR images. The accuracy of image registration was visually assessed by a board-certified radiation oncologist, and an image was excluded from the dataset when a mismatch occurred in the outer or inner contour of the patient's body. Algorithm development and validation were performed, for each patient, on images deformably matched to the pretreatment CT.

The relationship between MR images and CT images was evaluated according to the CT Hounsfield unit (HU) reference values, such as air (−100 to −900), bone (150 to 1,200), fat (−400 to 10), lung (−900 to 400), and soft tissues (−10 to 150). Based on the above criteria, the dCT was generated from the MR image. Since the magnitude of the MR signal may vary depending on the imaging device, the local maximum value of each image and the global maximum pixel value across all MR images of a given patient were obtained, and the images were normalized accordingly. CT images were pre-processed to remove the parts outside the body, such as frames and immobilization devices, based on the area of the MR image.

The dCT images were then separated into five tissue types based on the CT number (Figure 1). As the CT number is a continuous value, noise was included. A batch program was used to separate the dCT images. The separated images were then synthesized and reconstructed to build a dCT composed of five discrete tissue levels, dCT (5 level), which was compared with the original MR images and the original dCT images (Figure 2).
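The normalization and tissue-separation steps described above can be sketched as follows. This is a minimal NumPy illustration, not the batch program used in the study. The function names `normalize_mr` and `split_by_hu` are hypothetical, the per-patient global-maximum scaling is one assumed reading of the normalization step, and the non-overlapping bin edges in `HU_BINS` are placeholders chosen only so that each pixel falls into exactly one class; they are not the reference ranges quoted in the text.

```python
import numpy as np

# Hypothetical, non-overlapping HU bin edges (lower bound inclusive, upper exclusive).
# Illustrative placeholders only, NOT the reference ranges reported in the study.
HU_BINS = {
    "air": (-np.inf, -900.0),
    "lung": (-900.0, -400.0),
    "fat": (-400.0, -10.0),
    "soft_tissue": (-10.0, 150.0),
    "bone": (150.0, np.inf),
}


def normalize_mr(volume):
    """Scale one patient's MR volume by its global maximum (assumed scheme)."""
    volume = np.asarray(volume, dtype=np.float64)
    global_max = volume.max()
    if global_max <= 0:
        # Degenerate volume: nothing to scale.
        return np.zeros_like(volume)
    return volume / global_max


def split_by_hu(dct_slice, bins=HU_BINS):
    """Return one binary mask per tissue class for a dCT slice given in HU."""
    dct_slice = np.asarray(dct_slice, dtype=np.float64)
    return {
        name: ((dct_slice >= lo) & (dct_slice < hi)).astype(np.uint8)
        for name, (lo, hi) in bins.items()
    }
```

Because the placeholder bins partition the HU axis, the five masks sum to one at every pixel, which makes them usable as independent binary targets for five separate models.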

Patient Characteristics

Sixteen patients who were diagnosed with breast cancer and received MR-guided breast radiotherapy were analyzed for synthetic CT generation. The MR images of each patient covered the entire lung, and axial images were acquired at 3 mm intervals. A total of 1,209 MR images were obtained from the 16 patients.

Deep learning was performed based on the U-net model using the MR images of all patients. Fourteen patients were assigned to the training set, and the image data of the remaining 2 patients were used for validation.
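A patient-level split of this kind keeps all slices of one patient on the same side of the split. The sketch below is illustrative only: the text does not state how the two validation patients were chosen, so the seeded random shuffle, the function name `split_by_patient`, and the patient IDs are all assumptions.

```python
import random


def split_by_patient(patient_ids, n_validation=2, seed=0):
    """Hold out n_validation patients; all remaining patients go to training."""
    ids = sorted(set(patient_ids))
    if not 0 < n_validation < len(ids):
        raise ValueError("n_validation must be between 1 and len(ids) - 1")
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    rng.shuffle(ids)
    return sorted(ids[n_validation:]), sorted(ids[:n_validation])


# Sixteen hypothetical patient identifiers.
patients = [f"P{i:02d}" for i in range(1, 17)]
train_ids, val_ids = split_by_patient(patients)
```

Splitting by patient rather than by slice avoids placing neighboring slices of the same anatomy in both the training and validation sets.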

Deep learning based on U-net model

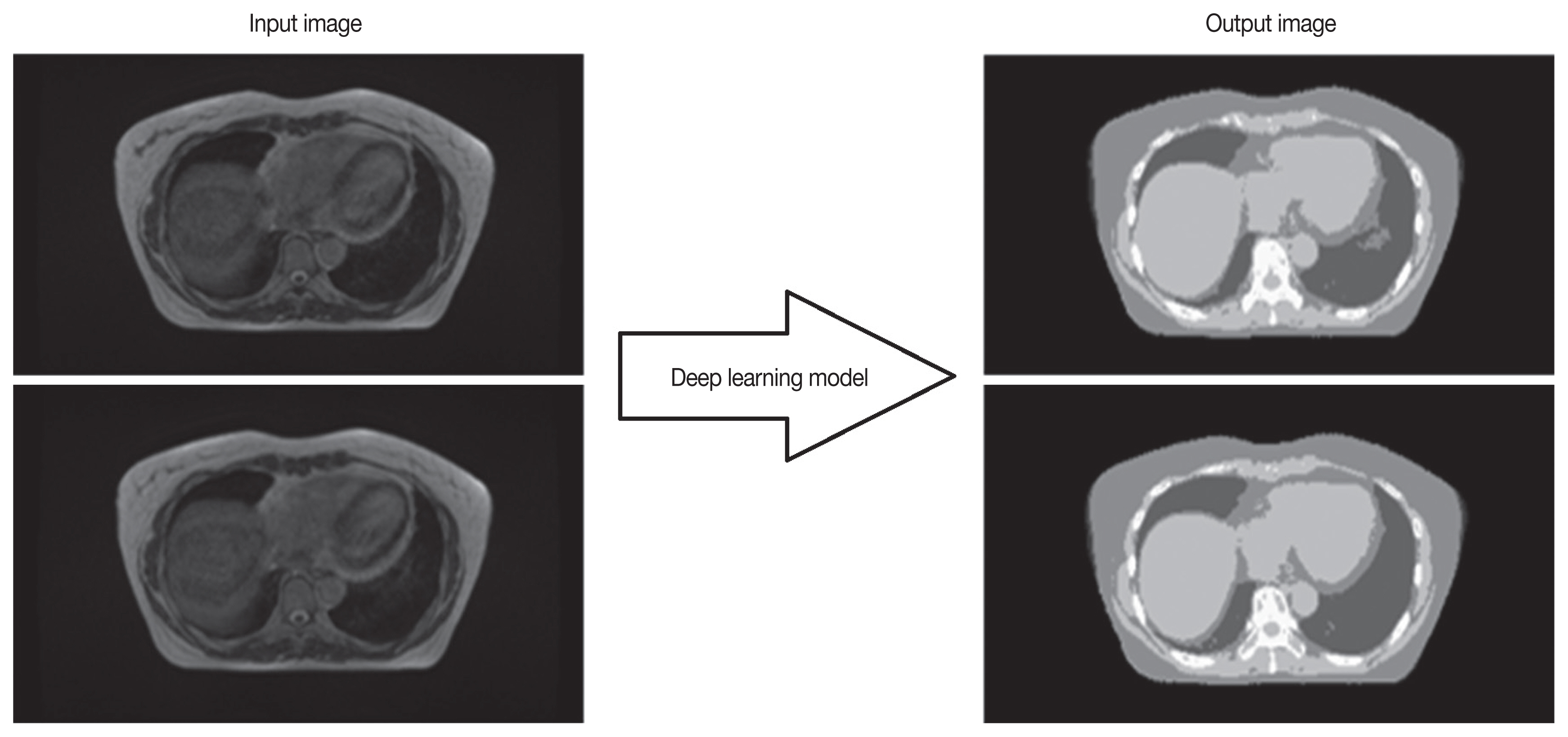

We generated five learning models, each mapping one MR image to one of the five dCT tissue images. Each model applies the U-net, a segmentation architecture among deep learning models, to map the MR image to the CT region of the corresponding tissue (Figure 3). Deep learning was implemented in the Keras framework with the Theano backend. From the database of 16 patients, 1,209 MR-dCT pairs were created and used for learning. The MR image was used as the input image, and the dCT image was designated as the mask image, so that the model learned the relationship between the input and mask images. Because the study data were limited, a pre-trained network trained on an MR/CT data set (https://github.com/ZFTurbo/ZF_UNET_224_Pretrained_Model/) was used for transfer learning. The pre-trained networks were trained using the Adam optimizer with a learning rate of 0.0001 and a dropout rate of 0.05. Binary cross-entropy was used as the loss function. The batch size was set to 20.
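For reference, the binary cross-entropy loss named above can be written out in a few lines. This is a NumPy sketch of the standard definition, not the Keras implementation used in the study; the `HYPERPARAMS` dictionary simply collects the values quoted in the text, and its name and layout are hypothetical.

```python
import numpy as np

# Values quoted in the text; the dictionary itself is an illustrative container.
HYPERPARAMS = {
    "optimizer": "adam",
    "learning_rate": 1e-4,
    "dropout_rate": 0.05,
    "batch_size": 20,
}


def binary_cross_entropy(y_true, y_pred, eps=1e-7):
    """Mean per-pixel binary cross-entropy between a mask and predicted probabilities."""
    y_true = np.asarray(y_true, dtype=np.float64)
    # Clip predictions so that log(0) never occurs.
    y_pred = np.clip(np.asarray(y_pred, dtype=np.float64), eps, 1.0 - eps)
    per_pixel = -(y_true * np.log(y_pred) + (1.0 - y_true) * np.log(1.0 - y_pred))
    return float(np.mean(per_pixel))
```

The loss is near zero when predicted probabilities agree with the binary mask and grows as confident predictions disagree with it.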

Images used for training were resized to 224×224 pixels, and the batch size was changed to 16 when the lowest loss value was reached within 200 epochs. To evaluate the performance of the learned model, we added air, lung, fat, soft tissue, and bone data from more patients. During training, the Dice coefficient was used as the pixel match rate to measure the loss and the error for the backpropagation algorithm, as shown below.

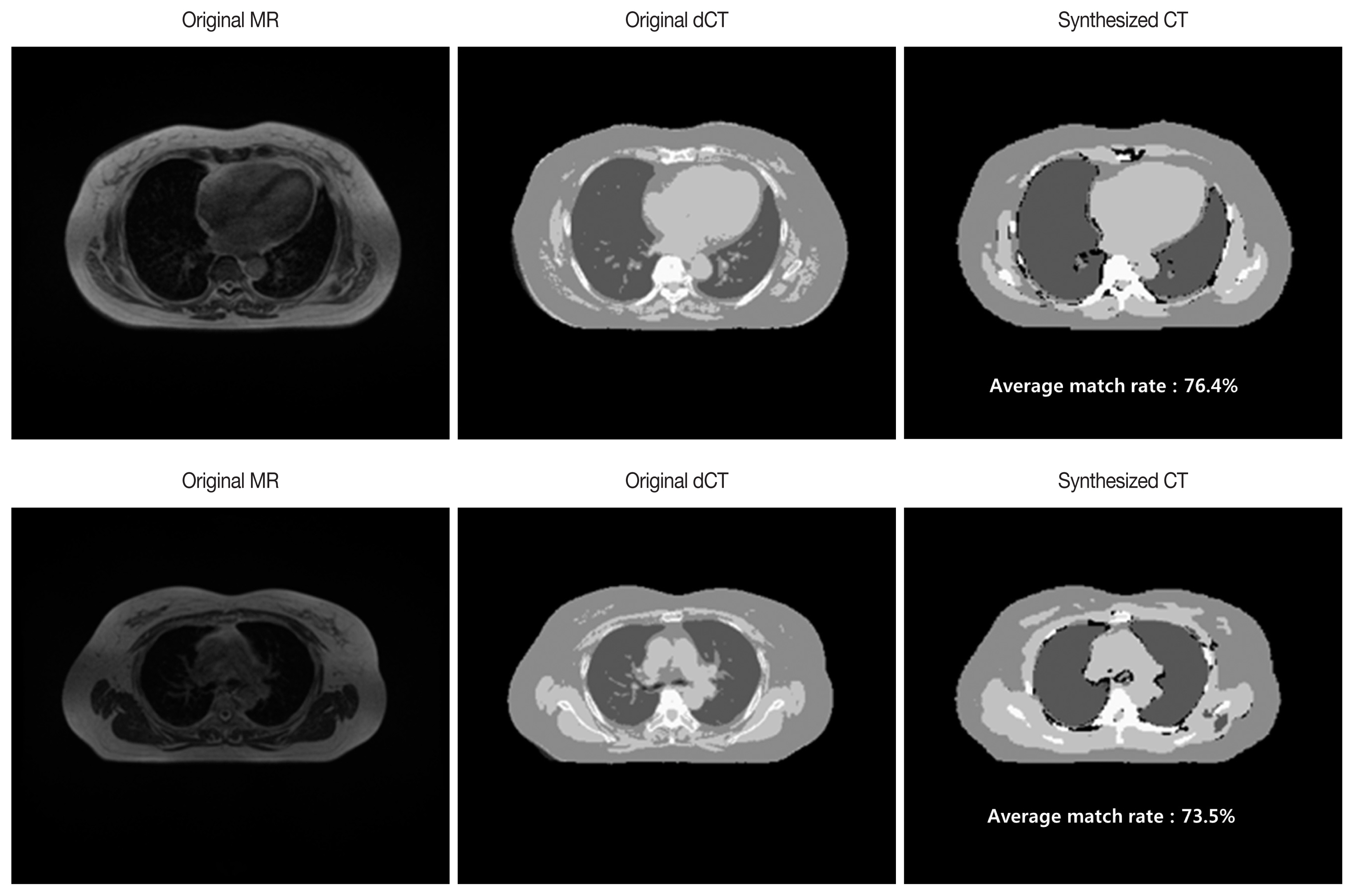
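As a concrete reference for the Dice coefficient, the following NumPy sketch uses the standard definition, 2|A∩B| / (|A| + |B|). The small `eps` term that guards against empty masks and the function names are assumptions; the exact form used in the study (for example, any smoothing constant) may differ.

```python
import numpy as np


def dice_coefficient(mask_true, mask_pred, eps=1e-7):
    """Dice overlap between two masks; 1.0 means perfect agreement."""
    t = np.asarray(mask_true, dtype=np.float64).ravel()
    p = np.asarray(mask_pred, dtype=np.float64).ravel()
    intersection = np.sum(t * p)
    # eps keeps the ratio defined when both masks are empty.
    return float((2.0 * intersection + eps) / (np.sum(t) + np.sum(p) + eps))


def dice_loss(mask_true, mask_pred):
    """Loss form commonly used for training: lower is better."""
    return 1.0 - dice_coefficient(mask_true, mask_pred)
```

For a reference mask with two foreground pixels and a prediction that recovers one of them with no false positives, the coefficient is 2·1 / (2 + 1), or about 0.67.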

Using all five learned models, we generated five output mask images from an arbitrary input MR image. When the mask images are combined and each is assigned its gray-scale level, a synthetic CT is derived, as shown in Figure 4. Image match was evaluated by comparing the pixel values of the synthetic CT generated by the deep learning method with those of the original CT. The match rate was calculated from the pixel-to-pixel match of the synthetic CT against the dCT (Figure 5).
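One way to combine the five model outputs and score the result is sketched below. The text does not specify how overlapping masks are resolved, so the per-pixel arg-max rule used here is an assumption, as are the gray levels in `TISSUE_LEVELS` and the function names `combine_masks` and `pixel_match_rate`.

```python
import numpy as np

# Hypothetical gray level assigned to each tissue class (not from the study).
TISSUE_LEVELS = {"air": 0, "lung": 1, "fat": 2, "soft_tissue": 3, "bone": 4}


def combine_masks(model_outputs, levels=TISSUE_LEVELS):
    """Assign each pixel the level of the tissue whose model output is highest."""
    names = list(levels)
    stack = np.stack([np.asarray(model_outputs[n], dtype=np.float64) for n in names])
    winner = np.argmax(stack, axis=0)  # index of the strongest class per pixel
    level_values = np.array([levels[n] for n in names])
    return level_values[winner]


def pixel_match_rate(synthetic, reference):
    """Percentage of pixels whose tissue level agrees between two images."""
    synthetic = np.asarray(synthetic)
    reference = np.asarray(reference)
    if synthetic.shape != reference.shape:
        raise ValueError("images must have the same shape")
    return 100.0 * float(np.mean(synthetic == reference))
```

Here the reference would be the five-level dCT, so the match rate counts pixels assigned to the same tissue class in both images.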

Results and Discussion

The first validation was carried out for two of the 16 patients, and the pixel match rate for each patient is shown in Table 1. For test patient 1, the match rate across 72 images had a maximum of 80.3%, a minimum of 67.9%, and an average of 76.4%. For test patient 2, the match rate across 64 images had a maximum of 79%, a minimum of 60.1%, and an average of 73.5%.

We then analyzed the pixel match rate of the synthetic CT for all 16 patients. The average pixel match rate was 68.7% (standard error 0.54%), the median was 69.8%, and the standard deviation was 4.4%. The modal match rate was around 70% (range 53.7–74.9%). A boxplot of the pixel match rate is shown in Figure 6.
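Summary statistics of this kind can be computed from a list of match rates with the Python standard library. The sketch below is illustrative: the function name `summarize` is hypothetical, the sample standard deviation (n − 1 denominator) is an assumption about the convention used, and the input values are invented for demonstration rather than taken from the study.

```python
import math
import statistics


def summarize(rates):
    """Return mean, median, sample SD, standard error, and range of match rates."""
    sd = statistics.stdev(rates)  # sample standard deviation (n - 1)
    return {
        "mean": statistics.mean(rates),
        "median": statistics.median(rates),
        "sd": sd,
        "se": sd / math.sqrt(len(rates)),  # standard error of the mean
        "min": min(rates),
        "max": max(rates),
    }


# Invented example values, not study data.
example = summarize([60.0, 70.0, 80.0])
```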

MR-guided radiotherapy with daily adaptive treatment enables physicians to observe tumor reduction in real time. This, in turn, allows treatment to be focused on the remaining tumor while minimizing exposure of the surrounding organs [10–13]. In head and neck cancer, tumor size changes dramatically during treatment, and real-time adaptive MR-guided radiotherapy can further reduce the normal tissue dose, which would eventually decrease adverse events. It has been reported that patient-reported pain was reduced while tumor control was maintained [14]. In this respect, MR-guided radiotherapy is the latest treatment technique capable of real-time verification of the volumetric dose distribution. However, the deformable registration algorithm is limited by volume changes during the image transformation process, which can lead to inaccurate calculation of the radiation dose distribution. Although quality assurance methods have been proposed to overcome these dose calculation errors, the ultimate solution would require simultaneous acquisition of a CT image. Acquiring CT and MR images simultaneously is currently not feasible, and repeated CT would increase radiation exposure to the patient. Thus, generating a synthetic CT from the real-time MR image, rather than deforming the baseline CT, would be a superior alternative [15]. As mentioned above, currently available real-time adaptive planning cannot calculate the radiation dose accurately because of volume changes introduced during deformable registration [4]. In this study, we constructed and tested an algorithm that uses deep learning to generate synthetic CT from MR images acquired during treatment. Independent training and validation with a larger cohort covering multiple sites is currently underway.

The U-net model was chosen as the deep learning method because it is much less time-consuming than atlas-based manual segmentation. In addition, atlas-based manual matching is not easily applied to analysis beyond the training set. Further, the U-net model has better potential for the future development of synthetic CT generation algorithms. Recently, a deep convolutional neural network method for synthetic CT generation has been presented [5]. That study reported notable mean absolute errors and significant results in comparison with deformable atlas registration and patch-based atlas fusion.

As already mentioned, the development of a synthetic CT generation algorithm is expected to enable more accurate dose calculation. In this study, the overall match rate was 60–70%, and accuracy and analytical power are expected to improve with more input data. To increase the match rate against the dCT, a five-level division by Hounsfield unit was used in the current study instead of the dCT itself. However, the classification must be reviewed manually, as boundaries may be quite ambiguous. Precise boundary segmentation between organs and tissues is important for increasing the match rate. Review of the segmentation applied to the training set by a physician or trained researcher would further improve accuracy. However, it is difficult to set exact segmentation points, and classification by a physician or trained researcher is cumbersome and requires substantial research resources. In this preliminary study, the match rate for bone and air was low in deep learning based synthetic CT generation. When synthetic CT is used in radiotherapy, we need a model that can best distinguish bone and air from other tissues, which are more or less water-equivalent in terms of electron density. If the synthetic CT generation model can accurately distinguish bone and air, the error in radiation treatment planning could be further reduced, and delivered radiation doses could be predicted more accurately.

This study revealed three areas that require further exploration. First, we should evaluate the feasibility of MR image integration and the suitability of the MR image modifications required for real-time MR image-guided radiotherapy. Second, the automated segmentation of the dCT by Hounsfield unit requires further refinement and tuning, especially for bone and air. Lastly, the reliability of the generated dCT should be reconsidered, as the dCT is not free from the aforementioned pitfalls. Using the dCT as the gold standard could therefore limit what the algorithm is able to learn during training.

The significance of this study is that, although preliminary in design, it has shown the possibility of deep learning based synthetic CT generation through evaluation of the image characteristics of MR and CT. Capturing the difference in image characteristics between bone, air, and other tissues is an important part of synthetic CT generation.

Conclusion

From the acquired MR and CT images, we developed and evaluated a deep learning model for efficient synthetic CT generation. The algorithm will be optimized by generating and evaluating synthetic CT for different body parts using the deep learning model with additional data from more patients. In the future, an MR image database is expected to be built for various parts of the body, such as the abdomen, thorax, and pelvis. The next step of this research will focus on demonstrating the feasibility of adapting the deep learning model to body areas beyond the thorax.